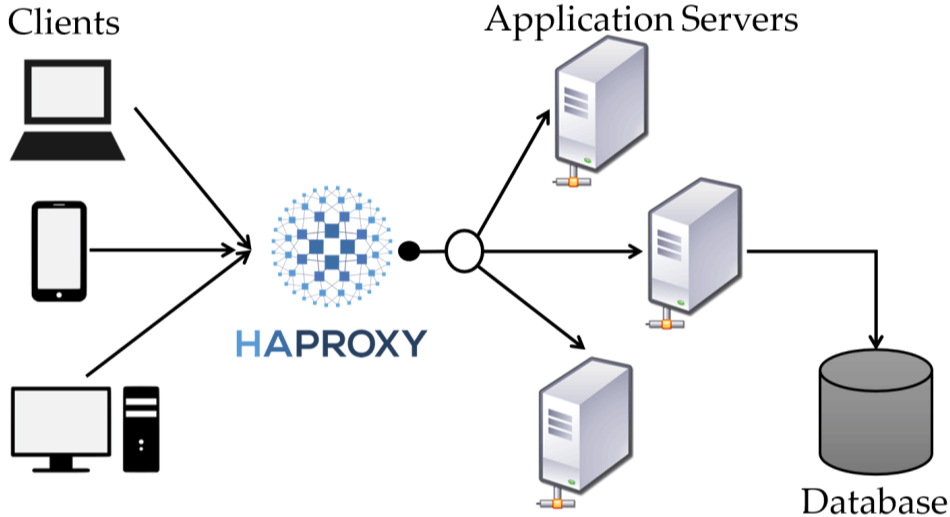

Why do we need a network load balancer?

As the name suggests, a load balancer distributes incoming network traffic evenly across the available application or web servers. A single application or web server is generally not robust enough to handle the extreme traffic of peak periods, such as the busiest times of the year.

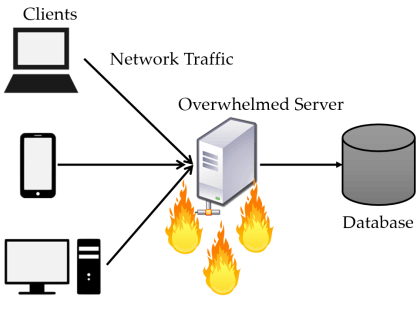

Moreover, if a single server goes down for any reason, end users can no longer access the application. A load balancer brings flexibility, reliability, and availability: when one server fails, traffic can be routed to the remaining healthy servers. It is considered an essential component of any web application, whether simple or complex, small or enterprise-scale.
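To make the idea concrete, here is a minimal Python sketch of one common distribution strategy, round-robin, which hands each new request to the next server in turn and skips servers marked as down. It is an illustration under simplifying assumptions, not how any particular load balancer is implemented: the class, its methods, and the server names are hypothetical, and health is set manually rather than detected by real health checks.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hypothetical balancer: rotates through servers, skipping unhealthy ones."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)   # all servers start as healthy
        self._ring = cycle(self.servers)   # endless rotation over the pool

    def mark_down(self, server):
        # In practice a health check would do this; here it is manual.
        self.healthy.discard(server)

    def mark_up(self, server):
        self.healthy.add(server)

    def next_server(self):
        # Try at most one full rotation before concluding nothing is available.
        for _ in range(len(self.servers)):
            server = next(self._ring)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


if __name__ == "__main__":
    lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
    print([lb.next_server() for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']
    lb.mark_down("app-2")                        # simulate a server failure
    print([lb.next_server() for _ in range(3)])  # ['app-3', 'app-1', 'app-3']
```

The second call shows the availability point from the text: once `app-2` is marked down, requests keep flowing to the remaining servers instead of failing. Production load balancers typically also offer other strategies, such as least-connections or weighted distribution.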