Traps and Tips - Test Driven Development

Before I begin, kudos to James Carr's blog post on

Test Driven Development (TDD) antipatterns

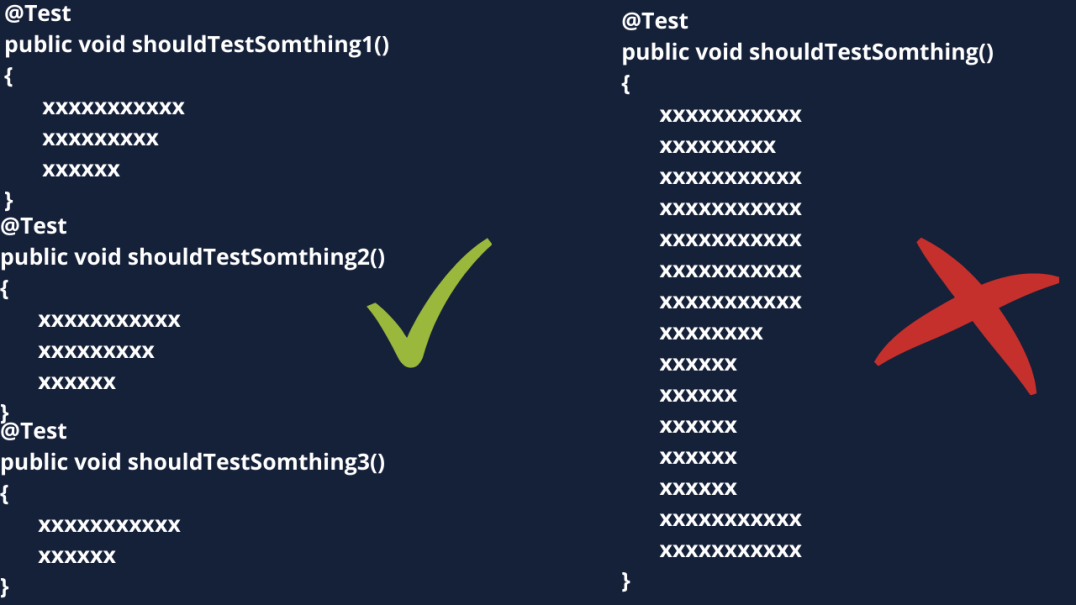

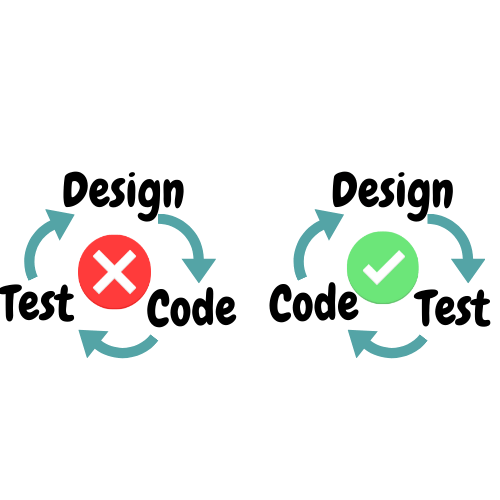

which is used as jumping-off point for this blog!. TDD is considered as one of the most

important advancements in the software development life cycle. Most of my teachers and seniors used to explain TDD as a lifesaving medicine for any software. However, just like any other medicine, TDD has its own pitfalls and side effects, which may harm a software project's health. Generally, this happens when your software does not take its medicine in a timely manner. Although TDD is widely practiced in all sorts of industries and with all sorts of programming languages (e.g. functional, non-functional, object-oriented, imperative, declarative), sadly it is not the starting point for every team. Perhaps that is one of the reasons TDD has not been as effective as it could be.